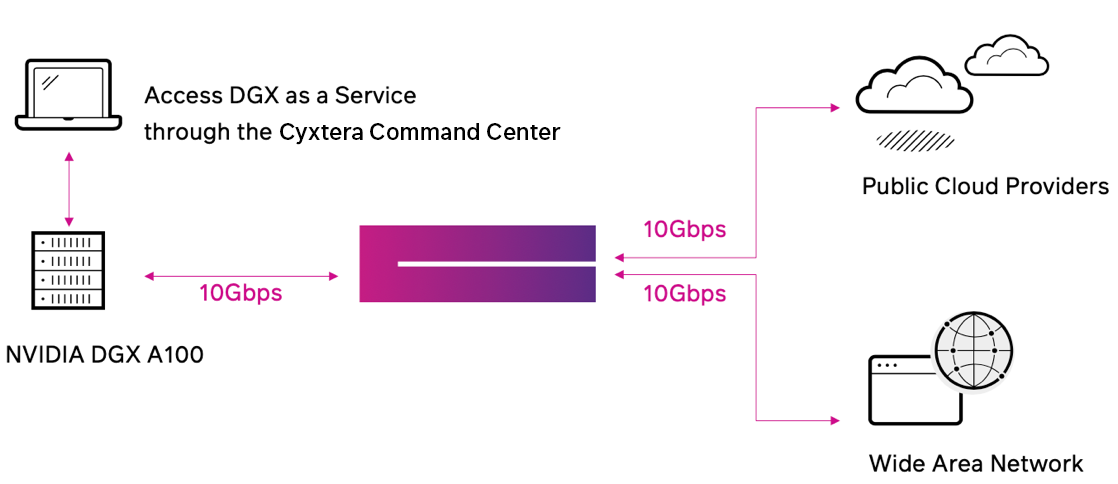

AI/ML Compute as a Service

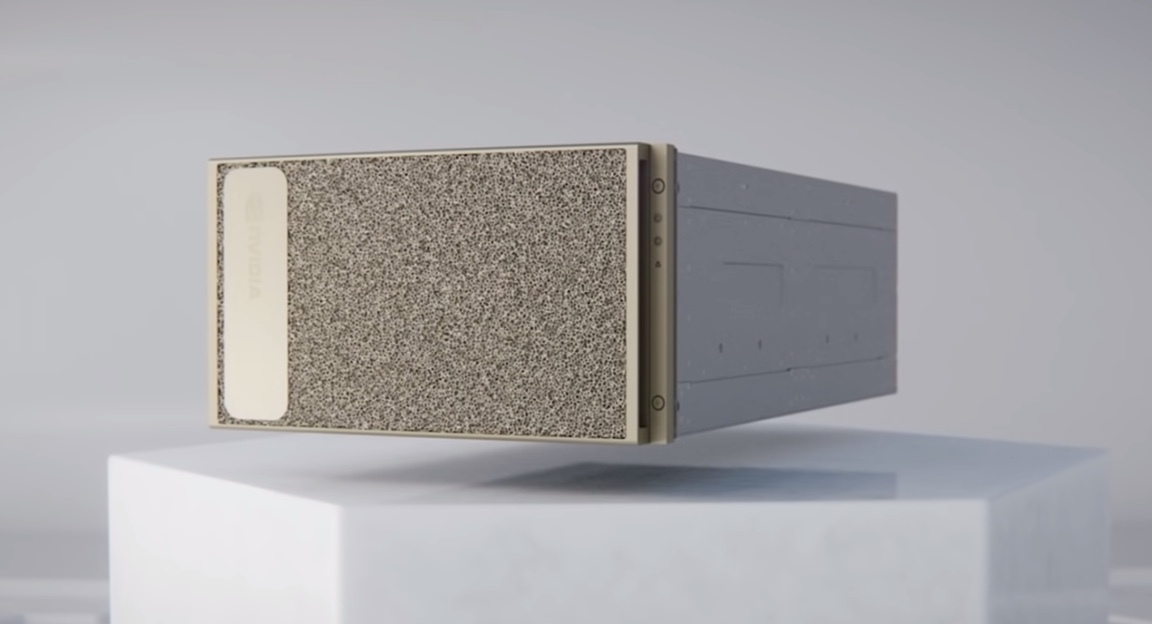

Accelerating AI and Machine Learning Implementation with NVIDIA DGX™ A100

Cyxtera’s AI/ML Compute as a Service, powered by NVIDIA DGX A100 systems, lets organizations deploy AI/ML workloads quickly and with great agility. Our as-a-service model simplifies provisioning AI and ML infrastructure and eliminates the need for large capital outlays and overprovisioning.